Calculating Inter-Rater Reliability

The assessment of inter-rater reliability (IRR), also called inter-rater agreement, is often necessary for research designs where data are collected through ratings provided by two or more raters. Resolving conflicts between raters does not affect the inter-rater reliability scores.


For example, one might need to find the sample size for an inter-rater reliability study with 3 raters, each evaluating 39 variables, at a 95% confidence level.

When qualitative coding techniques are used, establishing inter-rater reliability is a recognized process for determining the trustworthiness of the study.

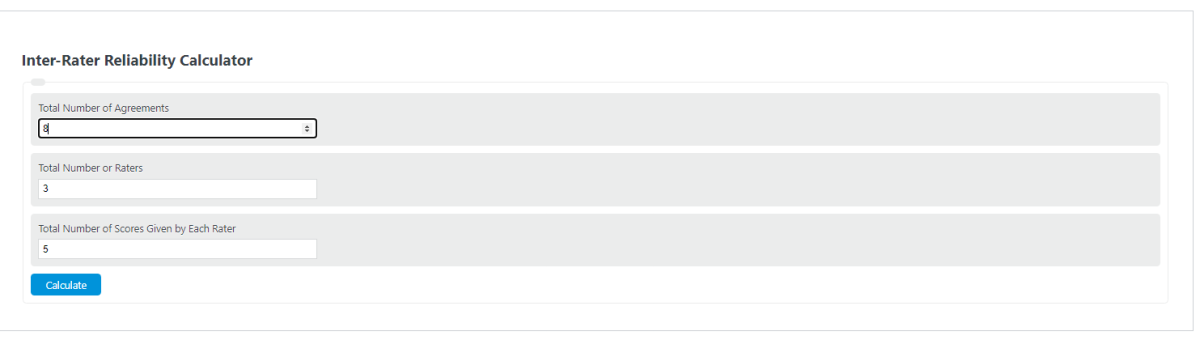

Inter-rater reliability is easy to calculate for qualitative research, but you must outline the underlying assumptions for doing so. The method for calculating it depends on the type of data (categorical, ordinal, or continuous) and on the number of raters.
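For continuous data, the text does not name a specific statistic; one common choice, assumed here, is an intraclass correlation coefficient (ICC). The sketch below computes the one-way random-effects, single-rater form, ICC(1,1), from a subjects-by-raters table with no missing values. The function name `icc_1_1` and the example data are hypothetical.

```python
def icc_1_1(ratings: list[list[float]]) -> float:
    """One-way random-effects, single-rater ICC, i.e. ICC(1,1).

    ratings: one row per subject, one column per rating (no missing values).
    """
    n = len(ratings)  # number of subjects
    if n < 2:
        raise ValueError("need at least two subjects")
    k = len(ratings[0])  # ratings per subject
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    grand_mean = sum(sum(row) for row in ratings) / (n * k)
    subject_means = [sum(row) / k for row in ratings]
    # Between-subjects mean square: spread of subject means around the grand mean.
    ms_between = k * sum((m - grand_mean) ** 2 for m in subject_means) / (n - 1)
    # Within-subjects mean square: spread of ratings around their subject's mean.
    ms_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, subject_means) for x in row
    ) / (n * (k - 1))
    denom = ms_between + (k - 1) * ms_within
    if denom == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_between - ms_within) / denom


print(round(icc_1_1([[1, 2], [3, 4], [5, 6]]), 4))  # 0.8824
```

Other ICC forms (two-way models, average-measure versions) use different formulas, so the right form depends on the study design; treat this as one option rather than the calculation for every continuous rating study.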

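Rater agreement can also drive automated decisions, for example about emails. If there is consensus that an email is bad, it is discarded; if there is consensus that it is good, it is allowed. If there is significant disagreement, it is quarantined.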
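The email rules above can be expressed as a small decision function. This is a minimal sketch under stated assumptions: each rater labels an email "bad" or "good", "consensus" means that at least a share `min_agreement` of the raters gave the majority label (all raters by default), and anything short of that counts as significant disagreement. The names `triage_email` and `ACTIONS` are hypothetical.

```python
from collections import Counter

# Hypothetical mapping from a consensus label to an automated action.
ACTIONS = {"bad": "discard", "good": "allow"}


def triage_email(labels: list[str], min_agreement: float = 1.0) -> str:
    """Return 'discard', 'allow', or 'quarantine' for one email.

    labels: one "bad"/"good" label per rater (assumed label set).
    min_agreement: share of raters that must give the majority label for it
    to count as consensus; 1.0 (the default) requires unanimity.
    """
    if not labels:
        raise ValueError("need at least one rating")
    label, count = Counter(labels).most_common(1)[0]
    if count / len(labels) >= min_agreement:
        return ACTIONS[label]
    # Anything below the required agreement is treated as significant disagreement.
    return "quarantine"


print(triage_email(["bad", "bad", "bad"]))                        # discard
print(triage_email(["good", "bad", "good"]))                      # quarantine
print(triage_email(["good", "bad", "good"], min_agreement=0.6))   # allow
```

Where to set `min_agreement` is a policy choice; a lower threshold automates more emails but quarantines fewer borderline cases.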

Ratings data can be binary, categorical, or ordinal.
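For ordinal ratings, a near miss (for example 4 versus 5) can be penalized less than a large disagreement. One common option, chosen here as an illustration rather than prescribed by the text, is weighted Cohen's kappa for two raters, kappa_w = 1 - sum(w_ij * o_ij) / sum(w_ij * e_ij), where o_ij are observed joint proportions, e_ij are the proportions expected from each rater's marginals, and w_ij are disagreement weights. The sketch assumes integer categories 1..k; the function name is hypothetical.

```python
def weighted_kappa(r1: list[int], r2: list[int], k: int, weights: str = "quadratic") -> float:
    """Weighted Cohen's kappa for two raters on ordinal categories 1..k."""
    if len(r1) != len(r2) or not r1:
        raise ValueError("both raters must rate the same, non-empty set of items")
    if k < 2 or any(not 1 <= x <= k for x in (*r1, *r2)):
        raise ValueError("ratings must be integers in 1..k, with k >= 2")
    if weights not in ("linear", "quadratic"):
        raise ValueError("weights must be 'linear' or 'quadratic'")
    n = len(r1)
    # obs[i][j]: share of items rated i+1 by rater 1 and j+1 by rater 2.
    obs = [[0.0] * k for _ in range(k)]
    for a, b in zip(r1, r2):
        obs[a - 1][b - 1] += 1 / n
    row = [sum(obs[i]) for i in range(k)]                       # rater 1 marginals
    col = [sum(obs[i][j] for i in range(k)) for j in range(k)]  # rater 2 marginals

    def w(i: int, j: int) -> float:
        # Disagreement weight: 0 on the diagonal, 1 for the most distant categories.
        d = abs(i - j) / (k - 1)
        return d if weights == "linear" else d * d

    observed = sum(w(i, j) * obs[i][j] for i in range(k) for j in range(k))
    expected = sum(w(i, j) * row[i] * col[j] for i in range(k) for j in range(k))
    if expected == 0:
        raise ValueError("kappa is undefined when no disagreement is expected by chance")
    return 1 - observed / expected


print(round(weighted_kappa([1, 2, 3, 3], [1, 3, 3, 2], k=3), 4))  # 0.6364
```

Quadratic weights penalize large disagreements much more than adjacent ones; linear weights scale the penalty in proportion to the distance between categories.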

Ratings that use 1 to 5 stars, for example, are on an ordinal scale. Reliability of any type can be estimated by comparing different sets of results produced by the same method. The following formula is used to calculate the inter-rater reliability between judges or raters:

$$\mathrm{IRR} = \frac{TA}{TR \times R} \times 100$$

where IRR is the inter-rater reliability (as a percentage), TA is the total number of agreements, TR is the number of ratings given by each rater, and R is the number of raters.

Ready-made tools are also available: the Statistics Solutions Kappa Calculator assesses the inter-rater reliability of two raters on a target, and inter-rater reliability or agreement can also be calculated in Excel.
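The IRR formula above can be transcribed directly. This sketch only restates its arithmetic, using the symbol meanings given with the formula; the function name `irr_percent` and the example counts are hypothetical, not taken from any particular calculator.

```python
def irr_percent(ta: float, tr: int, r: int) -> float:
    """IRR = TA / (TR * R) * 100, transcribed from the formula in the text.

    ta: total number of agreements (TA)
    tr: number of ratings given by each rater (TR)
    r:  number of raters (R)
    """
    if tr <= 0 or r <= 0:
        raise ValueError("TR and R must be positive")
    # Multiply by 100 first so round-number examples stay exact in floating point.
    return 100 * ta / (tr * r)


print(irr_percent(ta=27, tr=10, r=3))  # 90.0
```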

Inter-rater reliability can also be calculated from title-and-abstract screening decisions, and it can be reported for an individual binary code.
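For a single binary code rated by two reviewers, the simplest measure is percent agreement: the share of items on which both gave the same rating. A minimal sketch, assuming include (1) / exclude (0) screening decisions as the example; the function name and the decisions are hypothetical.

```python
def percent_agreement(r1: list, r2: list) -> float:
    """Percentage of items on which two raters gave the same rating."""
    if len(r1) != len(r2) or not r1:
        raise ValueError("both raters must rate the same, non-empty set of items")
    matches = sum(a == b for a, b in zip(r1, r2))
    return 100 * matches / len(r1)


# Hypothetical include (1) / exclude (0) decisions by two reviewers on ten records.
reviewer_a = [1, 1, 0, 0, 1, 0, 1, 1, 0, 1]
reviewer_b = [1, 0, 0, 0, 1, 0, 1, 1, 1, 1]
print(percent_agreement(reviewer_a, reviewer_b))  # 80.0
```

Percent agreement does not correct for agreement expected by chance, which chance-corrected statistics such as Cohen's kappa are designed to address.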

Once the raters' judgments on each email are known, an action can be automated for each one.

In Stata, which of the two commands, kap or kappa, you use will depend on how your data are entered.

Inter-rater reliability measures the agreement between two or more raters. It differs from intra-rater reliability, which concerns the consistency of a single rater's ratings across repeated occasions. There are four main types of reliability: test-retest, inter-rater, parallel forms, and internal consistency.
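When more than two raters each assign every subject to one category, one widely used chance-corrected statistic is Fleiss' kappa (named here as an option; the text does not prescribe it). The sketch assumes every subject is rated by the same number of raters and takes a table of counts in which row i says how many raters put subject i into each category. The function name and the example table are illustrative.

```python
def fleiss_kappa(counts: list[list[int]]) -> float:
    """Fleiss' kappa from a subjects-by-categories table of rater counts.

    counts[i][j] = number of raters who assigned subject i to category j.
    Assumes every subject was rated by the same number of raters (>= 2).
    """
    if not counts:
        raise ValueError("need at least one subject")
    n = sum(counts[0])   # raters per subject
    k = len(counts[0])   # number of categories
    if n < 2 or any(sum(row) != n or len(row) != k for row in counts):
        raise ValueError("every subject needs the same categories and number (>= 2) of ratings")
    subjects = len(counts)
    # p_j: share of all ratings that fell into category j.
    p = [sum(row[j] for row in counts) / (subjects * n) for j in range(k)]
    # P_i: share of agreeing rater pairs for subject i.
    p_i = [(sum(c * c for c in row) - n) / (n * (n - 1)) for row in counts]
    p_bar = sum(p_i) / subjects        # mean observed agreement
    p_e = sum(pj * pj for pj in p)     # agreement expected by chance
    if p_e == 1:
        raise ValueError("kappa is undefined when every rating is in one category")
    return (p_bar - p_e) / (1 - p_e)


# Illustrative counts: 10 subjects, 14 raters, 5 categories.
table = [
    [0, 0, 0, 0, 14], [0, 2, 6, 4, 2], [0, 0, 3, 5, 6], [0, 3, 9, 2, 0],
    [2, 2, 8, 1, 1], [7, 7, 0, 0, 0], [3, 2, 6, 3, 0], [2, 5, 3, 2, 2],
    [6, 5, 2, 1, 0], [0, 2, 2, 3, 7],
]
print(round(fleiss_kappa(table), 3))  # 0.21
```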

Reliability is an important part of any research study. In statistics, inter-rater reliability (also called by various similar names, such as inter-rater agreement, inter-rater concordance, inter-observer reliability, and inter-coder reliability) is the degree of agreement among independent observers who rate, code, or assess the same phenomenon.

To see what happens behind the scenes, you can look at how Dedoose calculates kappa in its Training Center and then calculate your own reliability manually. In Stata, interrater agreement is calculated using the kappa and kap commands.
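A manual calculation of kappa for two raters compares the observed agreement p_o with the agreement p_e expected by chance from each rater's category proportions: kappa = (p_o - p_e) / (1 - p_e). The sketch below implements this two-rater (Cohen's) kappa as a hand-calculation aid; it is not a claim about exactly which variant Dedoose or Stata computes in every setting, and the function name and ratings are hypothetical.

```python
from collections import Counter


def cohen_kappa(r1: list, r2: list) -> float:
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    if len(r1) != len(r2) or not r1:
        raise ValueError("both raters must rate the same, non-empty set of items")
    n = len(r1)
    # Observed agreement: share of items where both raters chose the same category.
    p_o = sum(a == b for a, b in zip(r1, r2)) / n
    # Chance agreement: for each category, the product of the two raters'
    # marginal proportions (Counter returns 0 for categories a rater never used).
    c1, c2 = Counter(r1), Counter(r2)
    p_e = sum(c1[cat] * c2[cat] for cat in c1) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


rater_1 = ["yes", "yes", "no", "no", "yes", "no", "yes", "yes", "no", "yes"]
rater_2 = ["yes", "no", "no", "no", "yes", "no", "yes", "yes", "yes", "yes"]
print(round(cohen_kappa(rater_1, rater_2), 3))  # 0.583
```

Here the raters agree on 80% of items, but because both say "yes" 60% of the time, 52% agreement is expected by chance, so kappa is noticeably lower than the raw agreement.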
